Ever wondered if machines could actually think like humans do?

What Is AGI (Artificial General Intelligence)?

An AGI system would demonstrate human-like adaptability.

The term “Artificial General Intelligence” was popularized around 2002 by AI researchers Shane Legg and Ben Goertzel, although the concept has been central to AI research since its earliest days. Other terms commonly used interchangeably include “strong AI,” “human-level AI,” and “general intelligent action,” though each carries slightly different philosophical connotations.

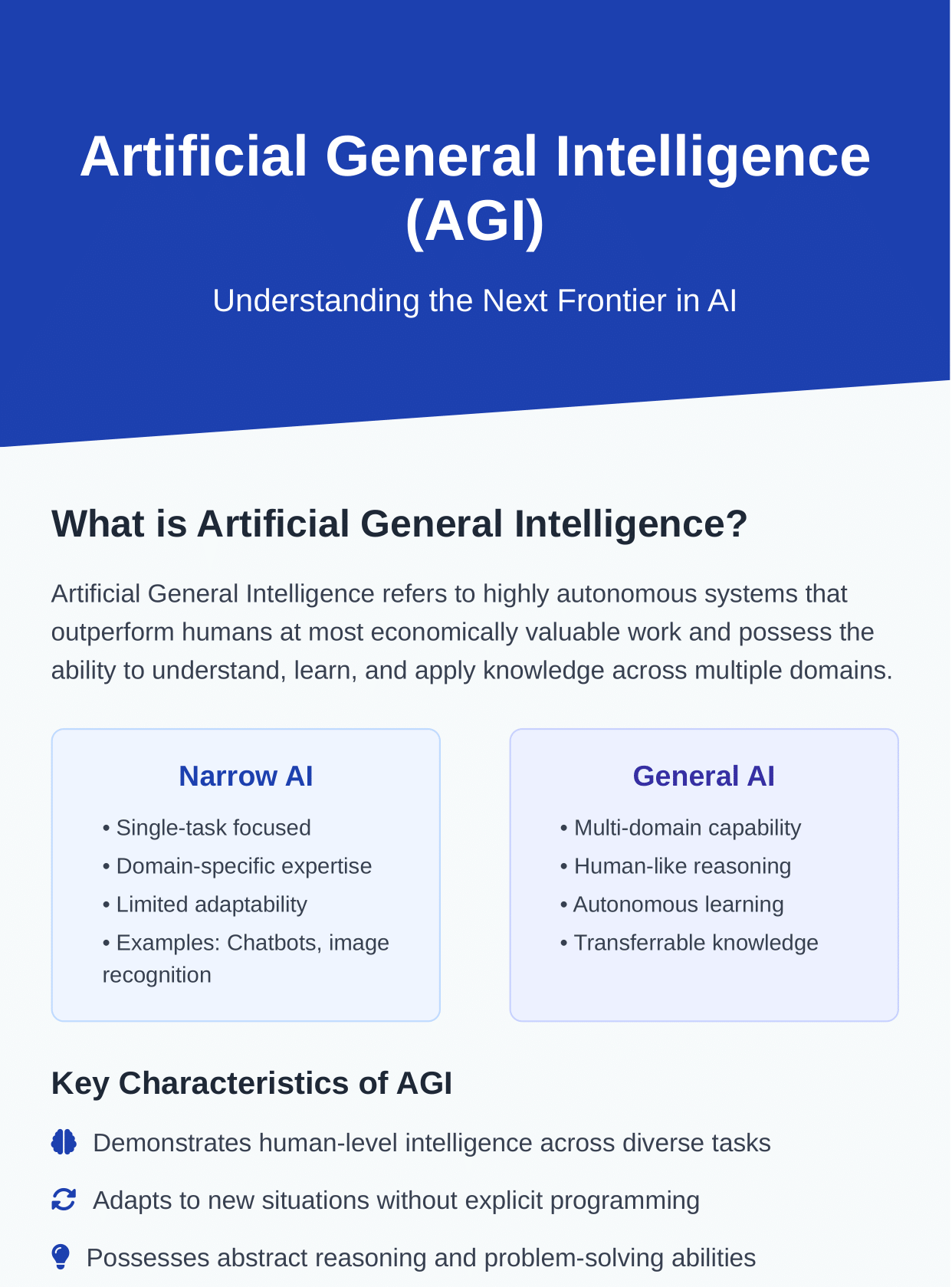

AGI vs. Narrow AI vs. Artificial Superintelligence

To understand AGI properly, we need to place it within the broader spectrum of artificial intelligence capabilities:

Narrow AI (ANI): Where We Are Today

- Voice assistants like Siri and Alexa

- Image recognition systems in smartphones

- Chess algorithms like Deep Blue

- Self-driving car navigation systems

- Recommendation algorithms for streaming platforms

While impressive in their own domains, these systems cannot transfer what they have learned to other tasks. Your chess software can’t suddenly help diagnose medical issues, and your voice assistant can’t learn to drive a car without considerable reprogramming.

Artificial General Intelligence: The Next Frontier

AGI represents a quantum leap beyond narrow AI. Such a system would possess:

- The ability to learn any intellectual task that a human can

- Transfer learning between different domains

- General problem-solving ability

- Understanding of abstract concepts

- Adaptive reasoning in unfamiliar situations

Most crucially, it would have what AI researchers call “generality”: the capacity to apply intelligence broadly rather than within narrow bounds.

Artificial Superintelligence (ASI): Beyond Human Capability

If AGI represents human-equivalent intellect, Artificial Superintelligence (ASI) would exceed it. As Oxford philosopher Nick Bostrom defines it, ASI would be “an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.” This hypothetical level of intelligence might solve problems that human minds cannot fathom, advancing science, health, and technology at unprecedented rates, while also raising existential questions about humanity’s position in a world with superior artificial minds.

Key Characteristics of AGI

What capabilities would a true AGI system need to demonstrate? While definitions differ among researchers, certain key qualities are frequently identified:

Versatility and Adaptability

True AGI would need to function well across many fields, from mathematical thinking to creative writing, strategic planning to emotional understanding. Unlike today’s specialized systems, it would be able to navigate unfamiliar environments and tasks without requiring retraining or reprogramming.

Self-Improvement and Learning

This self-improvement capability makes AGI particularly interesting, and potentially concerning.

An AGI system might theoretically begin a loop of recursive self-improvement, leading to rapidly accelerating capabilities.

Common Sense Reasoning

One of the most challenging features of human intelligence to replicate is what we casually term “common sense”—the implicit understanding of how the world works that doesn’t require explicit training.

Current AI systems lack true comprehension of the basic physical principles, social dynamics, and causal relationships that humans intuitively perceive. AGI would need to acquire this form of reasoning to function well in real-world contexts.

Creativity and Problem-Solving

Human intelligence isn’t simply about processing information; it’s about developing unique insights and innovative solutions. AGI would need to display genuine creativity, inventing new approaches to problems rather than simply optimizing existing methods.

This could involve scientific discovery, creative expression, or inventive problem-solving in disciplines like engineering or medicine.

Natural Language Understanding

The Path to AGI: Historical Perspective

The Birth of AI (1950s-1960s)

The formal beginning of AI as a field is commonly dated to a summer workshop at Dartmouth College in 1956, where pioneering computer scientists such as John McCarthy, Marvin Minsky, and Herbert Simon gathered under the newly coined banner of “artificial intelligence” and set out ambitious goals.

These early pioneers were remarkably hopeful.

The AI Winters (1970s-1980s)

This led to what’s known as the first “AI winter”—a time of reduced funding and interest in AI research. A similar cycle occurred in the late 1980s when Japan’s ambitious Fifth Generation Computer Project failed to accomplish its AGI-oriented aims.

The Rise of Applied AI (1990s-2000s)

This era produced real-world applications, including speech recognition, recommendation algorithms, and expert systems that could perform specialized tasks effectively. Yet these systems lacked the general capabilities that early AI pioneers had envisioned.

Deep Learning Revolution (2010s-Present)

Current State of AGI Research

Major Players in AGI Development

Several groups stand at the forefront of AGI research:

- OpenAI: Founded with the specific purpose of developing safe AGI, OpenAI has built increasingly powerful models like GPT-4, which some researchers think shows early signs of AGI-like capabilities.

- Google DeepMind: Combining DeepMind’s reinforcement learning expertise with Google’s resources, Google DeepMind focuses on building AI with general problem-solving ability.

- Anthropic: Founded by former OpenAI researchers, Anthropic is exploring “Constitutional AI” approaches that aim to build AI systems that are both powerful and aligned with human values.

- Meta AI: Meta (formerly Facebook) has invested extensively in AI research, including building large language models and researching multimodal AI systems.

- xAI: Elon Musk’s AI startup is developing advanced models like Grok 4, which according to some accounts shows considerable gains in reasoning ability.

Recent Breakthroughs

Several recent advances have accelerated progress toward AGI-like capabilities:

- Large Language Models (LLMs): Models like GPT-4 and Claude have shown extraordinary language abilities, including complex reasoning, coding, and cross-domain knowledge.

- Multimodal AI: Systems that can handle several kinds of data (text, images, audio, video) are displaying a more integrated comprehension of information.

- Reasoning-First Approaches: Models like OpenAI’s o1 represent a new paradigm where AI systems “think before they respond,” improving problem-solving capabilities.

- Self-Supervised Learning: Modern AI systems can learn from enormous volumes of unlabeled data, extracting patterns without human labeling.

- Reinforcement Learning from Human Feedback (RLHF): This technique allows AI models to learn from human preferences, helping align their outputs with human expectations.
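To make the idea of learning from human preferences a little more concrete, here is a minimal sketch of the pairwise preference loss that is often used to train a reward model in RLHF-style pipelines. The article does not specify a formulation, so the Bradley-Terry model used here is an assumption, and the function names `preference_loss` and `preference_probability` are hypothetical, chosen only for illustration.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Negative log-likelihood that the human-preferred response wins.

    Assumes a Bradley-Terry model: P(chosen > rejected) = sigmoid(margin),
    where margin is the difference between the two reward scores.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def preference_probability(reward_chosen: float, reward_rejected: float) -> float:
    """Modelled probability that the chosen response is preferred."""
    return math.exp(-preference_loss(reward_chosen, reward_rejected))


if __name__ == "__main__":
    # Hypothetical reward scores for two candidate responses.
    print(preference_probability(1.5, 0.5))
    print(preference_loss(1.5, 0.5))
```

The loss shrinks as the reward model scores the human-preferred response higher than the rejected one, which is the signal a policy model can later be optimized against.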

Emerging Research Directions

Several promising research directions may contribute to AGI development:

- Brain-Inspired Computing: Some researchers are building neural network architectures that more closely imitate the organization of the human brain.

- Embodied AI: This approach emphasizes the importance of physical interaction with the world for developing intelligence.

- Hybrid Systems: Combining neural networks with symbolic reasoning systems may produce more robust general intelligence.

- Open-Ended Learning: Creating systems that can continuously learn and innovate without predefined goals, comparable to biological evolution.

Tests for Confirming Human-Level AGI

How would we know when we’ve attained AGI? Researchers have proposed different benchmarks and tests:

The Turing Test

Recent testing suggests that advanced models like GPT-4 are judged to be human around 54% of the time in Turing Tests, approaching but not yet matching the rate at which actual humans are judged to be human (67%).

The Robot College Student Test

The Employment Test

The Coffee Test

The Modern Turing Test

A modern benchmark proposed by DeepMind co-founder Mustafa Suleyman challenges an AI to turn $100,000 into $1 million, demonstrating its ability to navigate complicated real-world systems and make strategic judgments.
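For a sense of scale, the arithmetic behind this benchmark can be sketched in a few lines. The dollar figures come from the text above; the time horizon is not stated there, so the 12 periods used in the example are purely an assumption, and the function names are hypothetical.

```python
def required_multiple(start: float, target: float) -> float:
    """How many times the starting capital must grow to reach the target."""
    if start <= 0:
        raise ValueError("start must be positive")
    return target / start


def required_growth_rate(start: float, target: float, periods: int) -> float:
    """Compound growth rate per period needed to reach the target."""
    if periods <= 0:
        raise ValueError("periods must be positive")
    return required_multiple(start, target) ** (1.0 / periods) - 1.0


if __name__ == "__main__":
    start, target = 100_000, 1_000_000
    print(f"Required multiple: {required_multiple(start, target):.0f}x")
    # Assumed horizon of 12 periods (for example, months); not from the article.
    rate = required_growth_rate(start, target, periods=12)
    print(f"Growth per period over 12 periods: {rate:.1%}")
```

Whatever horizon is chosen, the test demands a tenfold return, which is why it is framed as a measure of strategic real-world competence rather than of conversation.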

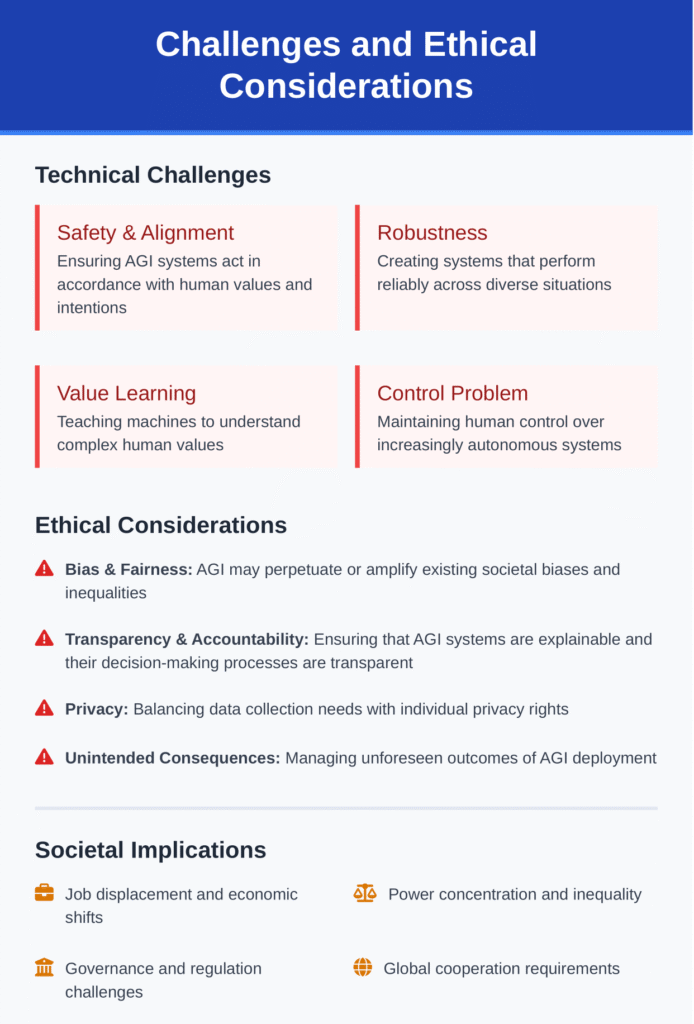

Challenges in Developing AGI

Despite great advances in AI capabilities, several key barriers remain between present systems and true AGI:

Common Sense Reasoning

Perhaps the most enduring difficulty is building AI systems that understand the world as people do, with an intuitive grasp of physics, social dynamics, and causal links that doesn’t require explicit programming.

As Meta’s Chief AI Scientist Yann LeCun explains, today’s most advanced AI systems “lack common sense” and can’t think before they act in the way humans naturally do. Bridging this gap remains a fundamental research challenge.

Transfer Learning

Developing more flexible transfer learning capabilities is key to building fully adaptable intelligence.

Interpretability and Explainability

Physical Embodiment

Some researchers argue that genuine intelligence requires physical interaction with the world. While disembodied language models demonstrate impressive cognitive abilities, they lack the grounded understanding that comes from navigating physical reality.

Consciousness and Self-Awareness

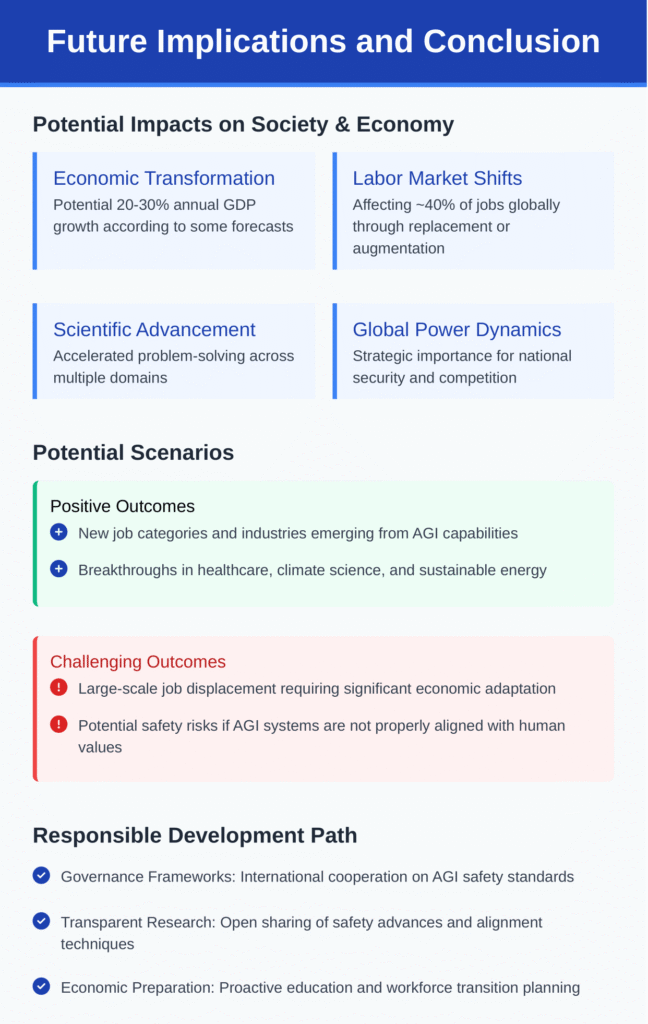

Potential Benefits and Applications: Impact of AGI

If AGI becomes reality, its potential uses could change practically every aspect of human society:

Scientific Discovery

Imagine an AGI screening thousands of candidate molecular compounds to uncover new antibiotics, or designing novel materials with precisely tailored properties for sustainable energy production.

Healthcare Revolution

Such platforms could democratize access to high-quality medical expertise, offering accurate diagnosis and treatment suggestions even in locations with poor healthcare infrastructure.

Education Transformation

Economic Productivity

AGI might drastically increase economic output by automating complicated cognitive tasks and augmenting human skills across multiple industries. From legal research to software development, marketing to financial planning, it could function as a force multiplier for human productivity.

Solving Global Challenges

Ethical Implications and Risks

The emergence of AGI raises important ethical questions that researchers, governments, and society must grapple with:

Job Displacement

This raises questions about economic transition, retraining, and perhaps fundamental adjustments to economic structures to ensure broadly shared prosperity in an AGI-enabled economy.

Security Risks

Alignment Problem

Perhaps the most fundamental difficulty is ensuring that these systems adopt goals and values aligned with human wellbeing. This “alignment problem” is central to AGI safety research: how do we ensure that massively powerful systems operate in ways beneficial to humanity?

Concentration of Power

Existential Risk

Is AGI Already Here? The Ongoing Debate

One of the most contested questions in AI research today is whether present systems are nearing or even attaining early versions of AGI:

The Case for Early AGI

Some researchers think that today’s most advanced models already display significant AGI-like capabilities:

The Case Against Current AGI

Other researchers remain unconvinced, pointing to basic shortcomings in present systems:

Redefining Intelligence

This debate underscores fundamental questions about how we define intelligence itself. Is intelligence primarily about language processing and pattern recognition? Does it require embodied interaction with the physical world? Must it involve consciousness or subjective experience?

As AI capabilities continue increasing, these philosophical questions regarding the nature of intelligence take on practical implications for how we evaluate progress toward AGI.

Timeline Predictions: When Will AGI Arrive?

When might we expect AGI to become reality? Expert opinions differ dramatically:

Expert Surveys

Surveys of AI researchers have shown shortening timelines for AGI arrival:

This acceleration in expectations reflects the rapid growth of AI capabilities in recent years, notably with large language models and multimodal systems.

Optimistic Predictions

Some prominent figures in AI expect relatively near-term timelines:

- Demis Hassabis (Google DeepMind): In 2023, said AGI may appear within “a decade or even a few years.”

- Jensen Huang (NVIDIA CEO): In 2024, predicted that within five years, AI would pass any test at least as well as humans.

- Leopold Aschenbrenner (former OpenAI researcher): In 2024, called AGI by 2027 “strikingly plausible.”

- Geoffrey Hinton (AI pioneer): In 2024, estimated 5 to 20 years until systems are smarter than humans.

Skeptical Perspectives

Others are more cautious about AGI timelines:

- Paul Allen (Microsoft co-founder): Before his death, stated that AGI would require “unforeseeable and fundamentally unpredictable breakthroughs” that he considered unlikely in the 21st century.

- Alan Winfield (roboticist): Compared the distance between present AI and AGI to the difference between current space flight and faster-than-light travel.

- Gary Marcus (AI researcher): Consistently argues that existing techniques face fundamental limitations that will require important conceptual breakthroughs to overcome.

The Difficulty of Prediction

As Our World in Data founder Max Roser points out, specialists in various domains often struggle to forecast developments in their own fields. Wilbur Wright famously admitted that he believed human flight was 50 years away, just two years before the Wright brothers achieved it themselves.

Preparing for an AGI Future

Regardless of exact dates, the possible development of AGI calls for serious preparation:

Research Priorities

Several research areas will be crucial for ensuring beneficial AGI development:

- AI Safety: Developing technical methods to ensure AGI systems remain aligned with human values and intentions.

- AI Governance: Creating institutional structures and rules for responsible AGI development and deployment.

- Human-AI Collaboration: Exploring how people and increasingly sophisticated AI systems may work together most effectively.

- Economic Transition: Understanding and preparing for the economic changes that AGI might trigger.

International Cooperation

The global nature of AGI research calls for international coordination. Initiatives like the Frontier Model Forum, which brings together major AI companies to share safety information, represent early attempts at collaborative governance.

As Ilya Sutskever emphasized in his TED talk: “What I claim will happen is that as people experience what AI can do… this will change the way we see AI and AGI, and this will change collective behavior.”

Individual Preparation

Even as corporations and governments prepare for AGI, individuals can take steps to adapt to an increasingly AI-driven future:

- Developing skills that complement rather than compete with AI capabilities

- Understanding the foundations of AI to engage effectively with these technologies

- Participating in conversations regarding how AI should be managed and deployed

Maintaining Perspective

As we navigate this uncertain future, keeping human values and wellbeing at the center of AGI development will be critical to ensuring these powerful technologies serve humanity’s best interests.

Conclusion

The path to AGI entails deep technical obstacles, from common sense reasoning to transfer learning to alignment with human values. Yet progress continues to accelerate, with each breakthrough bringing us closer to this transformative technology.

What are your thoughts on AGI? Do you feel we’re approaching this technological milestone, or do considerable difficulties remain? Share your perspective in the comments below.

1. What is AGI vs AI?

AI (Artificial Intelligence) refers to machines built to perform specific tasks like image recognition, translation, or conversation. AGI (Artificial General Intelligence) is a hypothetical form of AI that can accomplish any intellectual task a person can, with reasoning, learning, and adaptation across domains.

2. Is ChatGPT AGI or AI?

ChatGPT is AI, not AGI. It’s a sophisticated language model trained to generate human-like text, but it doesn’t understand or think like people across all circumstances.

3. What does AGI mean?

AGI stands for Artificial General Intelligence, which refers to machines that possess broad human-level cognitive abilities, including reasoning, problem-solving, and learning across any task.

4. What does AGI mean for ChatGPT?

If ChatGPT were AGI, it would understand context like a human, learn new skills on the fly, and think critically. Currently, it’s a specialized AI, not true AGI.

5. Is ChatGPT 4.0 AGI?

No, GPT-4 is not AGI. It’s a powerful AI model with outstanding linguistic skills, but it lacks deep comprehension, emotion, and general reasoning like a person.

6. What is the full form of AGI in AI?

The full form is Artificial General Intelligence: AI that’s capable of comprehending, learning, and completing any intellectual task a person can do.

7. Is Grok 4 AGI?

Nope. Grok 4 by xAI is still a form of narrow AI, just like ChatGPT. It’s powerful in conversation but not capable of true general intelligence.

8. Is there any AI that is AGI?

As of today, no AI has attained AGI. All existing systems (ChatGPT, Grok, Gemini, Claude, etc.) are narrow AI: they excel in specialized tasks but lack true general intelligence.